By Santosh Srinivasaiah (Diaconia) and Sean Anthony Guillory (MAD Warfare, BetBreakingNews)

Information professionals today have access to a growing set of analytical tools designed to map, measure, and visualize the information environment. From network analysis and cognitive terrain mapping to sentiment tracking and narrative diffusion models, these tools have significantly improved our ability to describe what is happening across the digital battlespace.

Yet the field’s most difficult goal remains unmet: achieving predictive and warning capability. Most systems still operate reactively, detecting manipulation or instability only after it becomes visible. Analysts can identify coordinated inauthentic behavior, platforms can remove content, and policymakers can issue responses, but by the time such actions occur, the underlying instability has already taken root.

This paper argues that the next step in advancing information operations and cognitive defense lies in borrowing from complexity science. Building on the foundation introduced to this community by Brian Russell and John Bicknell’s work on entropy and system behavior (Bicknell & Russell, 2023) and expanded through cognitive terrain mapping (Bicknell & Andros, 2024), we propose a specific application: Lyapunov stability analysis.

Lyapunov stability offers a quantitative framework for measuring when an information system is losing resilience, that is, when small perturbations begin to cause disproportionately large effects. Rather than reacting to chaos after it has appeared, this approach provides a mathematical means of identifying when discourse is approaching a tipping point.

1. The Fight for Cognitive Terrain

Modern influence operations exploit a fundamental property of complex adaptive systems: nonlinear sensitivity. A small, well-timed perturbation can trigger a disproportionate response. In social media ecosystems, that perturbation might be a single narrative injection, a set of bot amplifications, or a targeted engagement campaign that shifts attention and sentiment cascades (Starbird, 2019).

Yet our detection systems are built on linear logic: they look for cause-and-effect patterns rather than emergent feedback loops. Engagement metrics, sentiment scores, and network centrality measures describe what has happened, not how close the system is to a tipping point.

National security analysts recognize this dynamic in other domains. In counterinsurgency or gray-zone operations, instability often grows beneath the surface long before violence erupts. Cognitive warfare follows the same logic: the adversary seeks to erode system stability, not simply to spread messages.

What’s missing is a reliable early-warning signal for when that stability is being lost.

2. Complexity Science and the Cognitive Domain

Complexity science studies systems composed of many interacting parts that adapt to one another over time. Such systems (e.g. weather patterns, ecosystems, economies, and online communities) exhibit emergent behavior and nonlinear dynamics.

Entropy as a Measure of Disorder

Bicknell and Russell (2023) introduced entropy as a key indicator of information system health in The Coin of the Realm. In physics, entropy measures disorder; in information systems, it captures the variety and unpredictability of message flows. High informational entropy implies a noisy environment where users cannot distinguish signal from manipulation.

They proposed that monitoring entropy could reveal when an information environment becomes exploitable. As entropy rises, audiences lose the ability to filter noise, creating conditions for influence operations to succeed. Entropy, in this sense, becomes an early indicator of cognitive vulnerability.
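As a rough illustration of the entropy idea, the sketch below computes Shannon entropy over the topic labels seen in a window of messages. It is a minimal sketch, not the method from Bicknell and Russell (2023): the function name `shannon_entropy`, the topic labels, and the choice of topic labels as the unit of measurement are all assumptions made for the example.

```python
import math
from collections import Counter

def shannon_entropy(labels):
    """Shannon entropy (in bits) of the empirical distribution of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # Sum of -p * log2(p) over every observed label.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())

# Hypothetical message windows: one dominated by a single topic,
# one split evenly across four topics.
focused = ["election"] * 8
fragmented = ["election", "vaccine", "border", "economy"] * 2

print(shannon_entropy(focused))
print(shannon_entropy(fragmented))
```

The evenly split window scores higher than the single-topic window, which is the sense in which rising entropy indicates a noisier environment.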

Cognitive Terrain Mapping

Building on that, Bicknell and Andros (2024) proposed Cognitive Terrain Mapping, a complexity-based visualization method that tracks real-time sentiment, narrative flows, and community fragmentation. Like a topographical map of human discourse, it shows where trust is eroding and where adversaries could infiltrate.

These approaches advance our descriptive understanding of the cognitive domain. They help analysts see the landscape but still fall short of telling us when instability will occur. Entropy measures current disorder; cognitive terrain maps visualize ongoing motion. What both lack is a temporal forecast: a mathematical measure of how close the system is to a critical transition.

That is precisely where Lyapunov analysis fits in.

3. The Lyapunov Advantage

From Chaos Theory to Prediction

Lyapunov stability, developed in the late 19th century by Russian mathematician Aleksandr Lyapunov, quantifies how a system responds to small perturbations. In essence, it measures the rate at which nearby trajectories in a system’s state space diverge or converge.

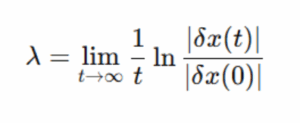

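The key parameter is the Lyapunov exponent (λ):

$$\lambda = \lim_{t \to \infty} \frac{1}{t} \ln \frac{|\delta x(t)|}{|\delta x(0)|}$$

where δx(t) is the distance between two initially close system trajectories at time t.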

- If λ < 0, trajectories converge: the system is stable.

- If λ = 0, the system is marginally stable, as in steady periodic motion.

- If λ > 0, trajectories diverge exponentially: the system is chaotic or unstable.

In plain terms, Lyapunov exponents measure how fast small disturbances grow.
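A toy system makes the sign of λ concrete. The sketch below uses the logistic map x → r·x·(1 − x), a standard textbook example that does not appear in the original article and is not a model of discourse. For a one-dimensional map, the exponent can be estimated as the long-run average of ln|f′(x)| along a trajectory. The function name and default parameters are illustrative choices.

```python
import math

def logistic_lyapunov(r, x0=0.2, n_transient=1000, n_iter=20000):
    """Estimate the Lyapunov exponent of the logistic map x -> r*x*(1-x)."""
    x = x0
    # Discard the transient so the average is taken on the attractor.
    for _ in range(n_transient):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_iter):
        # |f'(x)| = |r * (1 - 2x)| is the local stretching factor;
        # the floor avoids log(0) if x lands exactly on 0.5.
        total += math.log(max(abs(r * (1.0 - 2.0 * x)), 1e-300))
        x = r * x * (1.0 - x)
    return total / n_iter

stable = logistic_lyapunov(2.5)   # trajectory settles onto a fixed point
chaotic = logistic_lyapunov(3.9)  # trajectory wanders chaotically
print(f"r=2.5: lambda = {stable:.3f}")
print(f"r=3.9: lambda = {chaotic:.3f}")
```

At r = 2.5 the estimate is negative (perturbations shrink), while at r = 3.9 it is positive (perturbations grow), matching the cases listed above.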

Cross-Domain Proof

Lyapunov methods have proven effective in diverse fields:

- Climate science: Detecting when atmospheric systems approach tipping points leading to abrupt weather shifts (Legras & Vautard, 1996).

- Finance: Identifying pre-crash instability in asset markets by quantifying divergence in price dynamics (Peters, 1994).

- Neuroscience: Forecasting epileptic seizures by observing when neural oscillations lose stability (Srinivasaiah, 2025b).

Srinivasaiah (2025a) demonstrates that calculating Lyapunov exponents from time-series data can reveal when systems are about to transition from order to chaos. In EEG research, rising Lyapunov exponents indicate the brain is approaching a stress or seizure state that is detectable before visible symptoms appear.

The analogy to social systems is direct: discourse behaves like a living network. Before collapse into manipulated chaos, its internal coherence erodes in measurable ways.

4. Applying Lyapunov Stability to the Information Environment

Imagine online discourse as a dynamic system evolving in time. Each post, comment, and interaction nudges the system slightly, changing its overall state. When discourse is stable, small provocations fade quickly; when unstable, they spread unpredictably.

A Lyapunov-based early-warning system would quantify this sensitivity by measuring whether a community is resilient or on the edge of chaos.

Step 1: Model the System

Map a discourse network: users as nodes, interactions (shares, replies, mentions) as edges. Track time-series variables such as:

- Network connectivity and clustering

- Sentiment or emotional valence

- Topic frequency and velocity

- Actor coordination patterns

Together, these describe the system’s evolving state vector x(t).
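One way to turn raw interactions into a state vector is sketched below. It assumes a hypothetical event format of `(hour, source_user, target_user, sentiment)` tuples and computes three simple features per time window: message volume, directed edge density, and mean sentiment. These features are stand-ins for the variables listed above; a real pipeline would likely use richer measures.

```python
from collections import defaultdict
from statistics import mean

def state_vectors(events, window_hours=1):
    """Bucket (hour, src, dst, sentiment) events and summarise each window."""
    windows = defaultdict(list)
    for t, src, dst, sentiment in events:
        windows[int(t // window_hours)].append((src, dst, sentiment))

    vectors = []
    for w in sorted(windows):
        rows = windows[w]
        users = {u for src, dst, _ in rows for u in (src, dst)}
        edges = {(src, dst) for src, dst, _ in rows if src != dst}
        n = len(users)
        # Directed density: observed edges over possible ordered pairs.
        density = len(edges) / (n * (n - 1)) if n > 1 else 0.0
        vectors.append({
            "window": w,
            "volume": len(rows),
            "density": density,
            "mean_sentiment": mean([s for _, _, s in rows]),
        })
    return vectors

# Hypothetical interaction log.
events = [
    (0.1, "a", "b", 0.5),
    (0.5, "b", "c", -0.5),
    (1.2, "a", "c", 1.0),
]
for vec in state_vectors(events):
    print(vec)
```

Each dictionary is one sample of x(t); stacking them over time gives the series that later steps analyze.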

Step 2: Compute Local Stability

Calculate the largest Lyapunov exponent for these time-series features. An increasing positive λ signals growing instability (i.e., the system is becoming hypersensitive to perturbations).

For example, if sentiment variance and cross-community echoing both spike simultaneously, λ might turn positive, suggesting the discourse has entered a chaotic regime.
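The article does not specify an estimation algorithm. One common family of methods tracks how fast nearest neighbors in a delay-embedded series drift apart, in the spirit of Rosenstein et al.'s approach. The sketch below is a simplified, pure-Python version of that idea under several assumptions: the embedding dimension, lag, temporal exclusion window (`min_sep`), and fitting horizon are illustrative defaults, and the test signals are synthetic rather than discourse data.

```python
import math

def embed(series, dim, lag):
    """Delay-embed a scalar series into dim-dimensional points."""
    n = len(series) - (dim - 1) * lag
    return [tuple(series[i + j * lag] for j in range(dim)) for i in range(n)]

def largest_lyapunov(series, dim=2, lag=1, min_sep=10, horizon=5):
    """Slope of mean log-divergence of nearest neighbours, per time step."""
    pts = embed(series, dim, lag)
    usable = len(pts) - horizon
    sums = [0.0] * (horizon + 1)
    counts = [0] * (horizon + 1)
    for i in range(usable):
        # Nearest neighbour that is not close in time to point i.
        best_j, best_d = None, math.inf
        for j in range(usable):
            if abs(i - j) <= min_sep:
                continue
            d = math.dist(pts[i], pts[j])
            if 0.0 < d < best_d:
                best_j, best_d = j, d
        if best_j is None:
            continue
        # Follow both points forward and record their separation.
        for k in range(horizon + 1):
            d = math.dist(pts[i + k], pts[best_j + k])
            if d > 0.0:
                sums[k] += math.log(d)
                counts[k] += 1
    if min(counts) == 0:
        raise ValueError("series too short for these settings")
    curve = [s / c for s, c in zip(sums, counts)]
    # Least-squares slope of the divergence curve against step k.
    k_mean = horizon / 2
    y_mean = sum(curve) / len(curve)
    num = sum((k - k_mean) * (y - y_mean) for k, y in enumerate(curve))
    den = sum((k - k_mean) ** 2 for k in range(horizon + 1))
    return num / den

def logistic_series(r, n, x0=0.3):
    xs, x = [], x0
    for _ in range(n):
        x = r * x * (1 - x)
        xs.append(x)
    return xs

chaotic = logistic_series(3.9, 400)                  # synthetic chaotic signal
periodic = [math.sin(0.3 * i) for i in range(400)]   # synthetic regular signal
lam_chaotic = largest_lyapunov(chaotic)
lam_periodic = largest_lyapunov(periodic)
print(f"chaotic: {lam_chaotic:.2f}  periodic: {lam_periodic:.2f}")
```

The chaotic signal should yield a clearly positive slope and the periodic one a slope near zero. Real discourse features are noisy and non-stationary, so estimates of this kind would need validation before operational use.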

Step 3: Establish Thresholds and Alerts

Analysts could define operational thresholds (e.g., λ exceeding 0.05 for a sustained period) as cognitive warning indicators, much as seismologists flag increasing ground oscillations before earthquakes.
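A sustained-threshold rule of this kind can be sketched in a few lines. The 0.05 threshold comes from the example above; the requirement of three consecutive windows (`min_windows`) and the sample values are assumptions made for illustration.

```python
def first_sustained_breach(lams, threshold=0.05, min_windows=3):
    """Index of the window that completes the first sustained breach, or None."""
    run = 0
    for i, lam in enumerate(lams):
        # Count consecutive windows above the threshold; reset otherwise.
        run = run + 1 if lam > threshold else 0
        if run >= min_windows:
            return i
    return None

# Hypothetical hourly λ estimates for one topic.
hourly_lambda = [0.01, 0.06, 0.02, 0.06, 0.07, 0.08, 0.03]
print(first_sustained_breach(hourly_lambda))
```

Requiring a run of windows rather than a single crossing is one simple way to reduce false alarms from isolated spikes.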

When instability is detected, platforms or information operations (IO) teams could:

- Slow algorithmic amplification in affected topics

- Increase human moderation review

- Deploy counter-messaging or inoculation campaigns

- Redirect fact-checking resources

These actions don’t require identifying the manipulator; they simply respond to measured instability.

Step 4: Explainability and Human Decision Support

For IO practitioners, the key value is explainable mathematics. Instead of opaque “AI black boxes,” analysts see clear metrics:

- “This topic’s stability dropped 20% in the last six hours.”

- “This community’s Lyapunov index turned positive at 0800Z.”

Just as radar operators watch for anomalous returns, cognitive defense analysts could monitor stability dashboards fed by continuous Lyapunov analysis of discourse signals.

5. Integrating Lyapunov Analysis into Cognitive Defense

The defense community has long relied on indicators and warnings (I&W) to anticipate adversary actions such as missile launches, troop mobilizations, and cyber intrusions. Information and Cognitive Warfare operations deserve the same rigor.

A Lyapunov-based cognitive early warning system would function as an I&W layer for the information domain: measuring not content but stability. It could be implemented through a tiered process:

- Baseline Stability Mapping – Establish normal fluctuation ranges for online communities.

- Dynamic Monitoring – Continuously compute λ across time windows (e.g., hourly).

- Instability Detection – Flag when λ crosses pre-defined thresholds.

- Attribution Fusion – Combine with OSINT or HUMINT indicators to assess whether instability is organic or adversarial.

Such systems would not replace human judgment but enhance it by giving analysts a quantifiable “sixth sense” for cognitive terrain shifts.
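The baseline and detection tiers could be combined as follows. This is a minimal sketch that treats a community's "normal fluctuation range" as the baseline mean plus or minus k sample standard deviations. The choice of k = 3, the function names, and the sample values are assumptions, not part of the proposal above.

```python
from statistics import mean, stdev

def baseline_band(baseline, k=3.0):
    """Normal range for λ: baseline mean +/- k sample standard deviations."""
    mu, sigma = mean(baseline), stdev(baseline)
    return mu - k * sigma, mu + k * sigma

def flag_departures(baseline, live, k=3.0):
    """True for each live window whose λ exceeds the baseline upper bound."""
    _, upper = baseline_band(baseline, k)
    return [lam > upper for lam in live]

# Hypothetical λ estimates: a quiet reference period, then live windows.
baseline = [0.01, 0.02, 0.03, 0.02, 0.02]
live = [0.03, 0.05, 0.02]
print(baseline_band(baseline))
print(flag_departures(baseline, live))
```

A community-specific band of this kind lets a habitually volatile forum and a habitually quiet one be judged against their own histories rather than a single global threshold.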

Distinguishing Organic vs. Adversarial Chaos

A common objection is that online discourse is inherently chaotic. But natural volatility has patterns: it oscillates within bounded ranges. Adversarial manipulation tends to produce structured chaos with coordinated amplification, synchronized sentiment spikes, and anomalous diffusion rates.

When combined with network metadata, Lyapunov indicators can separate these patterns statistically. An organic protest movement may show temporary λ spikes that quickly normalize; a coordinated disinformation surge sustains positive λ longer and across multiple sub-communities.
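The duration-and-breadth distinction can be expressed as a simple triage heuristic. The sketch below is an assumption-laden illustration: the cutoffs `min_run` and `min_communities`, the labels, and the sample series are hypothetical. The output is a prompt for analyst review, not an attribution.

```python
def longest_positive_run(lams):
    """Length of the longest run of consecutive windows with λ > 0."""
    best = run = 0
    for lam in lams:
        run = run + 1 if lam > 0 else 0
        best = max(best, run)
    return best

def triage(lam_by_community, min_run=4, min_communities=2):
    """Label a pattern by how long and how widely λ stays positive."""
    sustained = sorted(
        name for name, lams in lam_by_community.items()
        if longest_positive_run(lams) >= min_run
    )
    label = "escalate" if len(sustained) >= min_communities else "monitor"
    return label, sustained

# Hypothetical per-community λ series over six windows.
brief_spike = {
    "forum_a": [-0.1, 0.2, 0.1, -0.1, -0.2, -0.1],
    "forum_b": [-0.1, -0.1, -0.1, -0.1, -0.1, -0.1],
}
broad_surge = {
    "forum_a": [-0.1, 0.1, 0.2, 0.2, 0.3, 0.2],
    "forum_b": [0.1, 0.1, 0.2, 0.1, -0.1, 0.1],
    "forum_c": [-0.2, -0.2, -0.2, -0.2, -0.2, -0.2],
}
print(triage(brief_spike))
print(triage(broad_surge))
```

A short spike confined to one community stays at "monitor", while positive λ that persists across several communities is escalated for fusion with other indicators.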

Ethics and Oversight

Predictive analytics in the cognitive domain must be bound by strict ethical standards. Early-warning data should focus on system stability, not individual behavior. Privacy-preserving computation (e.g., differential privacy) and transparent oversight mechanisms are essential to prevent misuse (Taddeo, 2021).

The mathematics can remain neutral; how institutions act upon it must remain accountable.

6. Operational and Research Pathways

The path forward for Lyapunov-based models for cognitive security involves:

- Simulation and Validation – Run controlled agent-based simulations of online discourse with embedded manipulators to test whether Lyapunov exponents rise before disruption.

- Historical Case Studies – Apply the model retrospectively to known campaigns (e.g., election interference, pandemic misinformation).

- Real-Time Pilots – Deploy limited monitoring on live platforms to refine thresholds and false-alarm rates.

- Integration with Platform Governance – Couple early-warning data with moderation or counter-messaging workflows.

- Ethical and Policy Frameworks – Publish open methodologies to ensure transparency and public trust.

The outcome could be a Cognitive Stability Index (CSI): a standardized measure akin to a “cognitive DEFCON level.” Governments, militaries, and civil organizations could track it much as they monitor cyber threat levels or epidemiological curves.

7. Conclusion: Measuring the Edge of Chaos

The future of cognitive security will not hinge on faster censorship or louder counter-narratives. It will depend on our ability to measure, in real time, when the discourse itself is losing equilibrium.

Lyapunov stability provides that metric. It shifts defense from reactive moderation to predictive maintenance of informational integrity. By treating social media as a dynamical system rather than a content feed, we can anticipate manipulation before it metastasizes.

Just as weather forecasters predict storms by modeling atmospheric instability, information professionals can forecast influence storms by modeling cognitive instability. The physics of chaos does not stop at neurons or markets; it applies equally to minds in networks.

Our challenge now is institutional, not mathematical: to build the data pipelines, analytical discipline, and ethical guardrails necessary to make Lyapunov-based early warning a pillar of 21st-century cognitive defense.

References

Bicknell, J., & Andros, C. (2024). Cognitive terrain mapping. Information Professionals Association.

Bicknell, J., & Russell, B. (2023). The coin of the realm: Understanding and predicting relative system behavior. Information Professionals Association.

Legras, B., & Vautard, R. (1996). A guide to Lyapunov vectors. In Predictability of Weather and Climate (pp. 135–158). Cambridge University Press.

Peters, E. (1994). Fractal market analysis: Applying chaos theory to investment and economics. Wiley.

Srinivasaiah, S. (2025a). Chaos systems and Lyapunov models. Medium.

Srinivasaiah, S. (2025b). Decoding the electric symphony: Chaos and order in brain dynamics. Medium.

Starbird, K. (2019). Disinformation’s spread: Bots, trolls, and all of us. Nature, 571(7766), 449.

Taddeo, M. (2021). Ethics of digital intelligence and cognitive warfare. Philosophy & Technology, 34(4), 877–890.